Over the past six weeks I’ve been travelling extensively, meeting clients and vendors and attending various tech and industry conferences and events. I’ve spoken with tech start-up founders, CTOs, operations leaders, and data teams across industries. There is a lot of excitement in the space. At times it feels like the AI sector is moving at the speed of light, and it is very easy to get caught up in the fear of being left behind.

The Generative AI (GenAI) hype seems to be reaching a crescendo. Leaders want copilots, agents, and predictive systems that improve efficiency and productivity and lower costs. But scratch beneath the surface and many organisations are stuck in a pattern of limited-use-case proofs of concept (PoCs) – not scalable, value-producing technology.

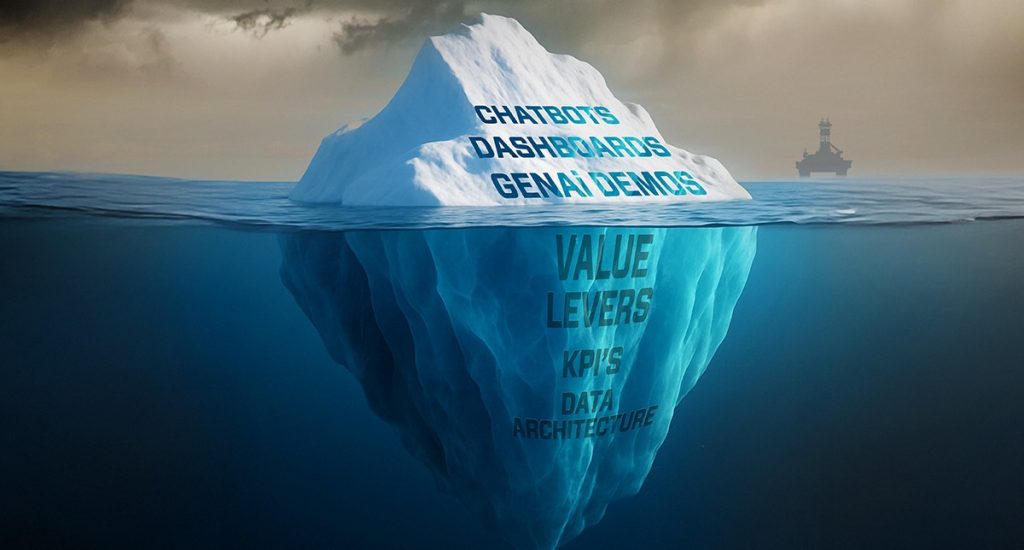

It appears to me that the core issue is this: in chasing ‘shiny’ GenAI outcomes, companies forget to link the technology to the value levers that drive business goals. The result is impressive outputs with nice visualisations that aren’t anchored to the KPIs that matter – e.g. revenue and growth, cost reduction, asset productivity. Without a clear business case, priorities drift and adoption stalls. Start with the lever and KPI, then shape the use case.

Once the business case is defined, another major pitfall that we’ve seen time and time again is a lack of focus on, and investment in, data architecture and quality. It’s unglamorous work, but the organisations that get it right are the ones with the solid foundations needed to build AI solutions that have measurable impact.

Across conversations, there seems to be a consistent trend: lots of narrow PoCs with minimal impact. Scaling AI for business impact requires the “unglamorous” business case and structural work to be done. The companies realising value aren’t the loudest on stage; they’re the ones treating these foundations as non-negotiable.

Key questions to consider

If AI disappeared from your business tomorrow, which decisions would slow down or get worse? If you can’t list them, you haven’t crossed from experimentation to impact.

AI value isn’t constrained by algorithms or models; it’s constrained by foundations. Build the basics first – a use case linked to the business value levers, and a coherent data architecture and quality strategy. Do that, and AI has the best chance of impacting business performance; skip it, and you’ll likely have nice dashboards but limited value creation.

If you’d value a conversation about putting the building blocks in place to maximise returns from your data and AI, I’d welcome the opportunity to connect.

E: Email ADC UK